Starlit City

A grant-winning independent music video project combining a virtually embodied performance with a synchronous real-world live performance.

(Pictures and video will be updated in early January after the mocap sessions.)

Skills: Virtual Production, Motion Capture, Character Modeling, Audio Production, Set Design, Video Editing

Made with Unreal Engine, Marvelous Designer, Blender, After Effects, Rokoko Smartsuit, VIVE Tracker 3.0, DaVinci Resolve, Ableton Live

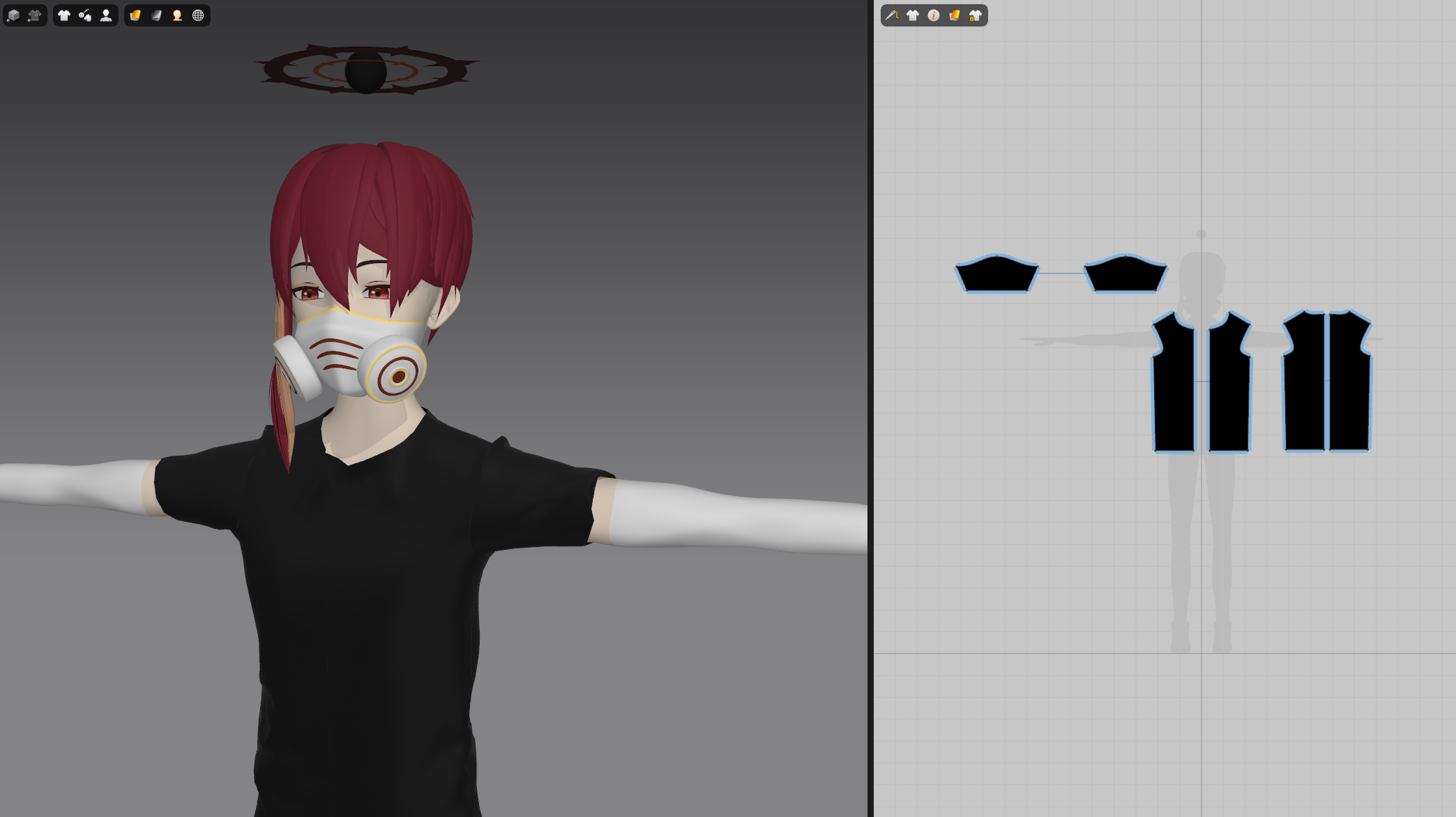

Virtual production workflow: work in progress

Embrace the light

This 1-minute music video is an independent project, started in September 2025, that aims to combine a virtually embodied avatar and a real musician’s live performance into one seamless video. While virtual performances are not new, I wanted to bring more of the real-world performance in alongside the virtual avatar and explore the new choreographic possibilities that open up when the video can switch between the virtual and the real performer.

The project features an original composition of mine and shows the same live performance both in the real world and in an Unreal Engine environment, using a 3D virtual model I developed myself. The avatar was modeled and rigged in Blender, and its clothes were created in Marvelous Designer.

The live performance will be captured with the performer wearing a Rokoko Smartsuit under the real-world garments, so that the outfits match as closely as possible in both environments. The real and virtual performances will then be combined into a seamless viewing experience. Motion capture tests will begin in mid-December and continue into January 2026.

This project was awarded the Northeastern University Art+Design 2025 Grant. The final music video, combining the virtual and real performances, will be linked here upon completion in April 2026.